Fed Report: Generative AI and Emerging Cyber Threats

Sep 22, 2024 By Triston Martin

Federal authorities warn that generative AI also introduces cybersecurity risks, and businesses may need cyber threat intelligence services to counter them. According to the report, generative AI security issues include how individuals use the technology both inside and outside the enterprise. Generative AI makes social engineering simpler for hackers to launch and harder for victims to spot, because attackers can target them with credible text, photos, video, and speech.

With generative AI, far more people can attempt to break into websites, software, and user profiles. Leaders in corporate technology and cybersecurity now confront fresh dangers on a scale perhaps never seen before. Let's examine how businesses can identify such threats quickly and handle them through cyber threat management.

Generative AI Cybersecurity Risks

Hackers can turn generative AI to unethical purposes. The same features that make generative AI good at responding to events and spotting dangers can also be used by attackers. Without cyber threat monitoring services, cybercriminals may be able to bypass your protections. Some risks include:

Phishing and Social Engineering

With generative AI, cybercriminals can now design very convincing phishing attacks. Attackers use artificial intelligence (AI) to create tailored messages that look like official correspondence, deceiving users into disclosing personal information or installing malware. This sophistication makes phishing more successful, because recipients find it harder to tell bogus emails or texts from legitimate ones.
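One signal that survives even well-written phishing text is a mismatch between the address a link displays and the address it actually points to. The following is a minimal sketch of that single check, assuming HTML email bodies. The function name `find_mismatched_links` and the sample message are hypothetical, and a real mail filter would combine many more signals.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class _LinkCollector(HTMLParser):
    """Collects (href, visible text) pairs for every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open <a> tag.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def _host(url):
    # urlparse only fills in the hostname when a scheme or "//" is present.
    if "//" not in url:
        url = "//" + url
    return (urlparse(url).hostname or "").lower()


def find_mismatched_links(html_body):
    """Return (text, href) pairs where the visible text names another host."""
    parser = _LinkCollector()
    parser.feed(html_body)
    parser.close()
    suspicious = []
    for href, text in parser.links:
        # Treat the visible text as an address only if it looks like one.
        shown = _host(text) if "." in text and " " not in text else ""
        if shown and shown != _host(href):
            suspicious.append((text, href))
    return suspicious


# Hypothetical message: the first link shows one host but targets another.
sample = (
    '<p>Reset at <a href="http://login.attacker.test/reset">www.mybank.com</a> '
    'or read <a href="https://www.mybank.com/help">www.mybank.com/help</a>.</p>'
)
print(find_mismatched_links(sample))
```

A check like this does not depend on how fluent the message is, which is why it stays useful when the text itself is AI-generated.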

Malware Development

Generative AI can be used to design malware that changes constantly. By adapting to different environments, AI-generated malware may circumvent conventional antivirus software and detection techniques. Its capacity to evade detection and neutralization raises the likelihood of a successful cyberattack. Cyber threat intelligence services can help reduce this risk.
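To see why constantly changing code defeats simple signature matching, consider a blocklist of exact file hashes. The sketch below is an illustration only: it uses harmless placeholder bytes, and the names `blocklist` and `is_blocked` are hypothetical. A single changed byte produces a completely different SHA-256 digest, so the variant is no longer recognized.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder bytes standing in for a previously seen bad file.
known_sample = b"placeholder payload v1"
blocklist = {sha256_hex(known_sample)}


def is_blocked(data: bytes) -> bool:
    """Exact-match signature check: blocked only if the digest is listed."""
    return sha256_hex(data) in blocklist


variant = known_sample + b"\x00"  # same content plus one extra byte

print(is_blocked(known_sample))  # True
print(is_blocked(variant))       # False
```

This is one reason defenders pair signature matching with behavior-based detection rather than relying on exact matches alone.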

Exploiting Vulnerabilities

AI can be programmed to scan software, systems, and even people for possible vulnerabilities, allowing attackers to identify weaknesses that human operators may overlook. By locating and exploiting these weak points, cybercriminals can conduct more focused attacks, often with greater accuracy and efficacy. Cyber threat management can help limit this exposure.

Automated Hacking

Generative AI automates hacking procedures, which lets attackers carry out attacks at scale. AI systems can complete difficult tasks quickly and with little human assistance. Because these automated attacks keep changing and adapting to the security measures they encounter, they are often more difficult to identify and neutralize.
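Automated attacks tend to produce bursts of activity that a person would not generate, and volume is one of the simpler things to monitor. The following is a minimal sketch of a sliding-window counter for failed logins. The class name `FailedLoginMonitor` and its thresholds are hypothetical, and adaptive attackers can slow down to stay under any fixed limit, so this is a first line of detection rather than a complete defense.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flags a source that exceeds max_failures within window_seconds."""

    def __init__(self, max_failures=5, window_seconds=60.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # source -> recent timestamps

    def record_failure(self, source, timestamp):
        """Record a failed login; return True if the source is over the limit."""
        events = self._failures[source]
        events.append(timestamp)
        # Drop events that have fallen out of the time window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_failures


monitor = FailedLoginMonitor(max_failures=3, window_seconds=10.0)
# Four failures in four seconds from one (documentation-range) address.
flags = [monitor.record_failure("203.0.113.7", t) for t in (0.0, 1.0, 2.0, 3.0)]
print(flags)  # [False, False, False, True]
```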

Fake Written Content

Using generative AI, attackers can create fake text that reads like a real conversation. Such counterfeit material can be used to impersonate people or deceive others in real-time digital interactions. For instance, fraudsters who are not native English speakers can craft sophisticated phishing messages in perfect English, which makes detection more difficult.

Fake Digital Content

AI can generate fake avatars, social networking profiles, and password-stealing websites. This false content looks and behaves like the genuine article, enabling large networks of fraudulent activity. Cybercriminals can steal logins and sensitive data by creating fake websites or accounts.
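Fake login sites often sit on domains that differ from a real one by a character or two. The sketch below flags such lookalikes by string similarity, using Python's standard `difflib`. The trusted list, the 0.85 threshold, and the function name `closest_trusted` are assumptions chosen for illustration, and a production check would also handle Unicode homoglyphs and subdomains.

```python
from difflib import SequenceMatcher

# Hypothetical list of domains the organization actually uses.
TRUSTED_DOMAINS = ["paypal.com", "microsoft.com"]


def closest_trusted(domain, trusted=TRUSTED_DOMAINS, threshold=0.85):
    """Return the trusted domain this one imitates, or None.

    An exact match is legitimate, so it is not reported as a lookalike.
    """
    domain = domain.lower().rstrip(".")
    if domain in trusted:
        return None
    best_name, best_score = None, 0.0
    for name in trusted:
        score = SequenceMatcher(None, domain, name).ratio()
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


print(closest_trusted("paypa1.com"))   # paypal.com
print(closest_trusted("paypal.com"))   # None
print(closest_trusted("example.org"))  # None
```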

Fake Documents

Safeguarding an AI system's whole life cycle, from data gathering and model training to deployment and maintenance, is known as "securing the AI pipeline." This includes guarding against unauthorized access or manipulation, maintaining the integrity of AI algorithms, and securing the data required to train AI models. Defending against new threats also entails updating cyber threat monitoring on a regular basis.

Deepfakes

Deepfakes are audio and video clips produced by generative AI that can fool viewers by imitating real people. Attackers increasingly use AI to create realistic deepfakes that mislead their targets. The appearance of deepfakes in security footage or video calls makes people distrustful and makes social engineering fraud easier. These videos can push viewers to hand over sensitive information or take risks.

Generative AI can create lifelike speech simulations that mimic bosses or senior executives. Attackers can use these AI-generated audio messages as false instructions to persuade staff members to transfer money or divulge private information. This kind of deceit is very successful because it takes advantage of workers' trust in their managers. Cyber threat intelligence services can help you counter deepfakes.

Securing the AI Pipeline

When AI systems handle private or sensitive data, it is crucial to prevent that data from being compromised. Ensuring the dependability and credibility of AI systems is vital to their adoption and efficient use. Manipulation of AI systems can have dire repercussions, ranging from the spread of false information to physical harm in environments under AI control. Securing the AI pipeline is critical for several reasons:

- Protect sensitive data: Crucial for AI handling personal or confidential information.

- Ensure reliability: Vital for AI acceptance and effective use.

- Guard against manipulation: Prevents misinformation and harm in AI environments.

- Follow best practices: Data governance, encryption, secure coding, multi-factor authentication, and continuous monitoring.
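One concrete way to guard training data against manipulation is to record a cryptographic digest of every file and re-check it before each training run. The following is a minimal sketch, assuming the data lives in a local directory. The function names `build_manifest` and `changed_files` are hypothetical, and the manifest itself would need to be stored somewhere an attacker cannot modify.

```python
import hashlib
from pathlib import Path


def file_digest(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir):
    """Map each file's relative path under data_dir to its digest."""
    root = Path(data_dir)
    return {
        str(path.relative_to(root)): file_digest(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def changed_files(data_dir, manifest):
    """List files that were added, removed, or modified since the manifest."""
    current = build_manifest(data_dir)
    names = set(manifest) | set(current)
    return sorted(n for n in names if manifest.get(n) != current.get(n))
```

A typical use is to call `build_manifest` once when a dataset is approved and then call `changed_files` before training, refusing to proceed if the returned list is not empty.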

Precautions Businesses Must Take

Generative AI lets hackers launch larger, quicker, and more diverse attacks. First, companies should assess their present security measures and systems, uncover weak points, and improve their cyber threat management to strengthen protection.

Reevaluating employee training programs is also necessary, since cybersecurity is a shared responsibility within the company. Companies can reduce the risk of attacks by training staff to recognize and react to threats, including the hazards associated with generative AI. In addition, organizations that want to stay ahead of new AI-based dangers should consider cyber threat monitoring and the following:

- Utilize Secure Access Service Edge (SASE) and Zero Trust Network Access (ZTNA) techniques to shift trust from the network perimeter to ongoing user, device, and activity monitoring.

- Use security services such as Endpoint Detection and Response (EDR) to get real-time information on developing threats at the network edge, so you can mitigate attacks more quickly.

- To strengthen your defenses and help level the playing field, consider using AI security and automation technologies or hiring cyber threat intelligence services. By separating genuine threats from false alerts, artificial intelligence (AI) may help reduce noise in the system and free up your security personnel to concentrate where they are most needed.
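The Zero Trust idea in the first point above, where each request is judged on the user, the device, and current activity rather than on network location, can be sketched as a small policy function. Everything here is hypothetical: the `AccessRequest` fields, the `decide` function, and the 0.7 risk threshold are illustrative assumptions, not the behavior of any particular SASE or ZTNA product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_authenticated: bool
    mfa_passed: bool
    device_compliant: bool
    resource_sensitivity: str  # "low" or "high"
    risk_score: float          # 0.0 (benign) to 1.0 (hostile), from monitoring


def decide(request, max_risk=0.7):
    """Return "allow", "step_up" (ask for MFA), or "deny" for one request.

    Nothing is trusted because of where the request comes from; every
    request is evaluated on identity, device state, and observed risk.
    """
    if not request.user_authenticated or not request.device_compliant:
        return "deny"
    if request.risk_score >= max_risk:
        return "deny"
    if request.resource_sensitivity == "high" and not request.mfa_passed:
        return "step_up"
    return "allow"


routine = AccessRequest(True, False, True, "low", 0.1)
payroll = AccessRequest(True, False, True, "high", 0.1)
risky = AccessRequest(True, True, True, "high", 0.9)
print(decide(routine), decide(payroll), decide(risky))  # allow step_up deny
```

Because the decision is recomputed per request, a session that starts out trusted can still be denied later if monitoring raises its risk score.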

-

FinTech Sep 08, 2024

FinTech Sep 08, 2024Hybrid Agencies: Managing Remote Work and Global Teams Effectively

A hybrid agency offers flexible marketing services that are suited to each client's needs by combining the knowledge of in-house and outside experts.

-

Currency Jan 13, 2024

Currency Jan 13, 2024Help you understand crypto assets

First introduce how the ownership and control power of crypto assets changes, the essence and transaction principle of crypto assets, and then introduce the characteristics of Bitcoin, which is a digital currency system, and finally describe the role of nodes in this system.

-

Currency Oct 20, 2024

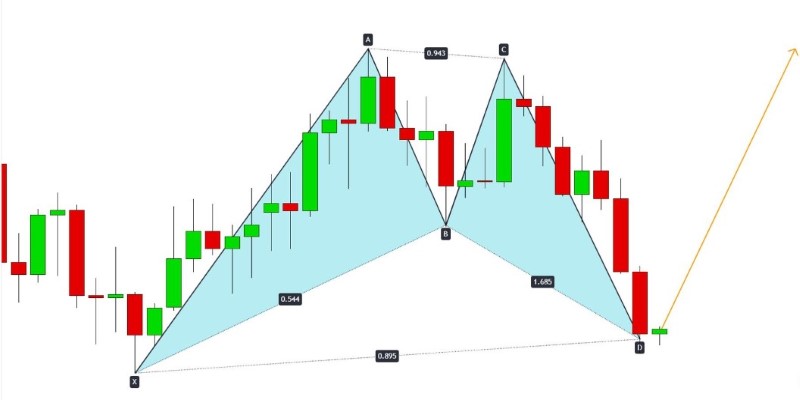

Currency Oct 20, 2024Predicting Forex Reversals with Harmonic Patterns: A Comprehensive Guide

How to use harmonic patterns for effective forex trading in 2024. Understand key patterns like Gartley and Butterfly, and how Fibonacci retracement helps traders forecast price movements

-

Business Sep 29, 2024

Business Sep 29, 2024The Importance of Financial Support in Local Communities

Discover the way the Farmers and Merchants Grant Program is helping businesses in Long Beach and improving effects on the community.